Automatic speech recognition (ASR) is becoming a core building block for AI products, from meeting tools to voice agents. Mistral’s new Voxtral Transcribe 2 family targets this space with two models that split cleanly into batch and realtime use cases, while keeping cost, latency, and deployment constraints in focus.

The release includes:

- Voxtral Mini Transcribe V2 for batch transcription with diarization.

- Voxtral Realtime (Voxtral Mini 4B Realtime 2602) for low-latency streaming transcription, released as open weights.

Both models are designed for 13 languages: English, Chinese, Hindi, Spanish, Arabic, French, Portuguese, Russian, German, Japanese, Korean, Italian, and Dutch.

Model family: batch and streaming, with clear roles

Mistral positions Voxtral Transcribe 2 as ‘two next-generation speech-to-text models’ with state-of-the-art transcription quality, diarization, and ultra-low latency.

- Voxtral Mini Transcribe V2 is the batch model. It is optimized for transcription quality and diarization across domains and languages and exposed as an efficient audio input model in the Mistral API.

- Voxtral Realtime is the streaming model. It is built with a dedicated streaming architecture and is released as an open-weights model under Apache 2.0 on Hugging Face, with a recommended vLLM runtime.

A key detail: speaker diarization is provided by Voxtral Mini Transcribe V2, not by Voxtral Realtime. Realtime focuses strictly on fast, accurate streaming transcription.

Voxtral Realtime: 4B-parameter streaming ASR with configurable delay

Voxtral Mini 4B Realtime 2602 is a 4B-parameter multilingual realtime speech-transcription model. It is among the first open-weights models to reach accuracy comparable to offline systems with a delay under 500 ms.

Architecture:

- ≈3.4B-parameter language model.

- ≈0.6B-parameter audio encoder.

- The audio encoder is trained from scratch with causal attention.

- Both encoder and LM use sliding-window attention, enabling effectively “infinite” streaming.
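To make the causal, sliding-window idea concrete, here is a minimal sketch of the attention mask such a layer would use. The window size of 3 is purely illustrative; the article does not state the window sizes the model actually uses, and the function name is hypothetical.

```python
def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Return mask[i][j] == True when position i may attend to position j.

    Causal: j must not be in the future (j <= i).
    Sliding window: j must be among the last `window` positions (i - j < window).
    """
    if seq_len < 0 or window < 1:
        raise ValueError("seq_len must be >= 0 and window must be >= 1")
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


# Illustrative sizes only, not the model's real configuration.
mask = sliding_window_causal_mask(seq_len=6, window=3)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Each row attends to at most `window` positions, so the per-step cost stays bounded however long the stream runs, which is what makes open-ended streaming practical.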

Latency vs accuracy is explicitly configurable:

- Transcription delay is tunable from 80 ms to 2.4 s via a transcription_delay_ms parameter.

- Mistral describes latency as “configurable down to sub-200 ms” for live applications.

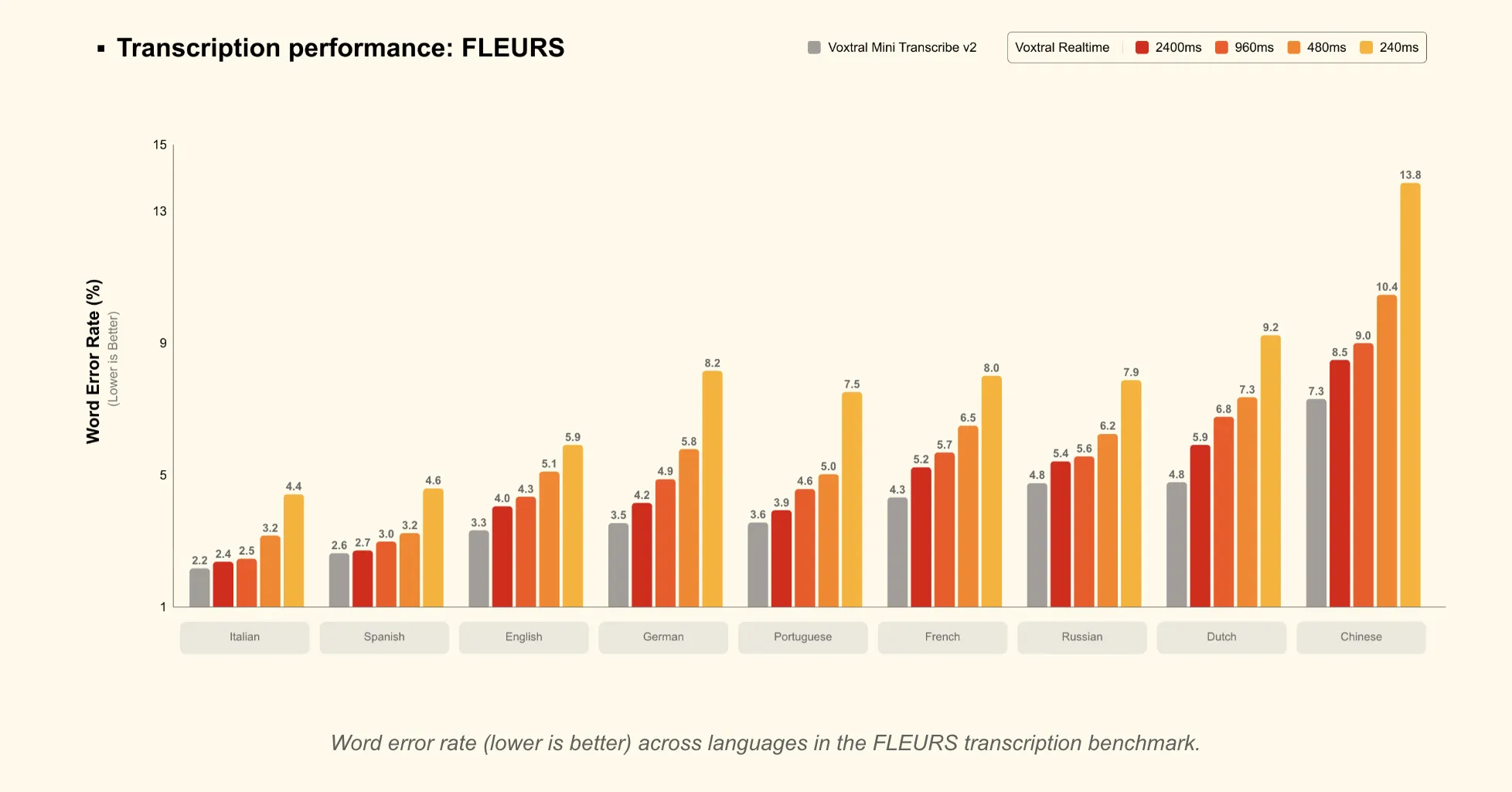

- At 480 ms delay, Realtime matches leading offline open-source transcription models and realtime APIs on benchmarks such as FLEURS and long-form English.

- At 2.4 s delay, Realtime matches Voxtral Mini Transcribe V2 on FLEURS, which is appropriate for subtitling tasks where slightly higher latency is acceptable.

From a deployment standpoint:

- The model is released in BF16 and is designed for on-device or edge deployment.

- It can run in realtime on a single GPU with ≥16 GB memory, according to the vLLM serving instructions in the model card.
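A quick back-of-the-envelope check shows why a 16 GB GPU is a plausible floor. This is a rough sketch that counts only the weights at 2 bytes per BF16 parameter and uses decimal gigabytes; real usage also depends on the KV cache, activations, and runtime overhead, which are not estimated here.

```python
BYTES_PER_BF16_PARAM = 2  # BF16 stores each parameter in 16 bits


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_BF16_PARAM) -> float:
    """Approximate weight footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


lm_params = 3.4e9       # approximate language model size from the article
encoder_params = 0.6e9  # approximate audio encoder size from the article
total_params = lm_params + encoder_params

weights_gb = weight_memory_gb(total_params)
headroom_gb = 16 - weights_gb  # what remains on a 16 GB GPU

print(f"{total_params / 1e9:.1f}B params -> ~{weights_gb:.1f} GB of BF16 weights")
print(f"~{headroom_gb:.1f} GB left for KV cache, activations, and runtime overhead")
```

Under these assumptions the weights take roughly half of a 16 GB card, leaving the rest for everything else the serving runtime needs.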

The main control knob is the delay setting:

- Lower delays (≈80–200 ms) for interactive agents where responsiveness dominates.

- Around 480 ms as the recommended “sweet spot” between latency and accuracy.

- Higher delays (up to 2.4 s) when you need accuracy as close as possible to the batch model.
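The tiers above can be captured in a small helper. This is a sketch under assumptions: the use-case names and both function names are hypothetical, and the 160 ms preset is an arbitrary pick inside the 80–200 ms band rather than a value recommended by Mistral. Only the 80 ms and 2.4 s bounds and the 480 ms midpoint come from the article.

```python
MIN_DELAY_MS = 80     # lower bound stated in the article
MAX_DELAY_MS = 2400   # upper bound stated in the article (2.4 s)

# Hypothetical use-case labels mapped to delays from the tiers above.
DELAY_PRESETS_MS = {
    "voice_agent": 160,   # arbitrary value in the 80-200 ms interactive band
    "balanced": 480,      # the latency/accuracy midpoint described above
    "subtitling": 2400,   # closest to batch-model accuracy
}


def clamp_delay_ms(requested_ms: int) -> int:
    """Force a requested delay into the supported 80-2400 ms range."""
    return max(MIN_DELAY_MS, min(MAX_DELAY_MS, requested_ms))


def pick_delay_ms(use_case: str) -> int:
    """Look up a preset delay, failing loudly on unknown use cases."""
    try:
        return DELAY_PRESETS_MS[use_case]
    except KeyError:
        raise ValueError(f"unknown use case: {use_case!r}") from None


print(pick_delay_ms("balanced"))
print(clamp_delay_ms(5000))
```

The resulting integer would then be passed as the `transcription_delay_ms` setting; how that setting is supplied to the runtime is not covered here.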

Voxtral Mini Transcribe V2: batch ASR with diarization and context biasing

Voxtral Mini Transcribe V2 is a closed-weights audio input model optimized only for transcription. It is exposed in the Mistral API as voxtral-mini-2602 at $0.003 per minute.

On benchmarks and pricing:

- Around 4% word error rate (WER) on the FLEURS transcription benchmark, averaged over the top 10 languages.

- “Best price-performance of any transcription API” at $0.003/min.

- Outperforms GPT-4o mini Transcribe, Gemini 2.5 Flash, Assembly Universal, and Deepgram Nova on accuracy in Mistral’s own comparisons.

- Processes audio ≈3× faster than ElevenLabs’ Scribe v2 while matching quality at one-fifth the cost.
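To put the per-minute price in perspective, the sketch below estimates cost for a given amount of audio. It assumes billing is strictly proportional to audio duration; the article does not say whether Mistral rounds up to whole minutes or applies minimums, so treat the output as an estimate. The function name is hypothetical.

```python
BATCH_PRICE_PER_MIN_USD = 0.003  # Voxtral Mini Transcribe V2 price from the article


def transcription_cost_usd(audio_seconds: float,
                           price_per_min: float = BATCH_PRICE_PER_MIN_USD) -> float:
    """Estimate cost assuming simple pro-rata billing (an assumption)."""
    if audio_seconds < 0:
        raise ValueError("audio_seconds must be non-negative")
    return audio_seconds / 60 * price_per_min


three_hours_s = 3 * 60 * 60
thousand_hours_s = 1000 * 60 * 60

print(f"3 h of audio:     ${transcription_cost_usd(three_hours_s):.2f}")
print(f"1,000 h of audio: ${transcription_cost_usd(thousand_hours_s):.2f}")
```

Passing a different `price_per_min` lets the same helper compare against any other per-minute rate.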

Enterprise-oriented features are concentrated in this model:

- Speaker diarization

  - Outputs speaker labels with precise start and end times.

  - Designed for meetings, interviews, and multi-party calls.

  - For overlapping speech, the model typically emits a single speaker label.

- Context biasing

  - Accepts up to 100 words or phrases to bias transcription toward specific names or domain terms.

  - Optimized for English, with experimental support for other languages.

- Word-level timestamps

  - Per-word start and end timestamps for subtitles, alignment, and searchable audio workflows.

- Noise robustness

  - Maintains accuracy in noisy environments such as factory floors, call centers, and field recordings.

- Longer audio support

  - Handles up to 3 hours of audio in a single request.
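As an illustration of the subtitle use case for word-level timestamps, the sketch below groups words into SRT cues. The input shape (a list of dicts with `text`, `start`, and `end` in seconds) is an assumption made for this example, not the documented response schema, and the function names are hypothetical.

```python
def format_srt_time(seconds: float) -> str:
    """Convert seconds to the SRT timestamp form HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def words_to_srt(words: list[dict], max_words: int = 7) -> str:
    """Group consecutive words into cues of at most `max_words` words each."""
    cues = []
    for index in range(0, len(words), max_words):
        chunk = words[index:index + max_words]
        start = format_srt_time(chunk[0]["start"])   # cue starts with its first word
        end = format_srt_time(chunk[-1]["end"])      # and ends with its last word
        text = " ".join(word["text"] for word in chunk)
        cues.append(f"{len(cues) + 1}\n{start} --> {end}\n{text}\n")
    return "\n".join(cues)


# Made-up sample data in the assumed shape.
sample_words = [
    {"text": "Hello", "start": 0.0, "end": 0.4},
    {"text": "world", "start": 0.5, "end": 0.9},
    {"text": "again", "start": 1.0, "end": 1.3},
]
print(words_to_srt(sample_words, max_words=2))
```

A fixed word count per cue is the simplest grouping rule; a production subtitler would more likely split on pauses, punctuation, or speaker changes.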

Language coverage mirrors Realtime: the same 13 languages, with Mistral noting that non-English performance “significantly outpaces competitors” in its own evaluation.

APIs, tooling, and deployment options

The integration paths are straightforward and differ slightly between the two models:

- Voxtral Mini Transcribe V2

  - Served via the Mistral audio transcription API (/v1/audio/transcriptions) as an efficient transcription-only service.

  - Priced at $0.003/min. (Mistral AI)

  - Available in Mistral Studio’s audio playground and in Le Chat for interactive testing.

- Voxtral Realtime

  - Available via the Mistral API at $0.006/min.

  - Released as open weights on Hugging Face (mistralai/Voxtral-Mini-4B-Realtime-2602) under Apache 2.0, with official vLLM Realtime support.
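For the batch model, a request to the transcription endpoint might be assembled roughly as follows. This sketch only builds the form fields and sends nothing. The endpoint path and model name come from the article; the field names `diarize`, `timestamp_granularities`, and `context_bias`, their string encodings, and the `TranscriptionRequest` class are assumptions for illustration and should be checked against the official API reference.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

API_URL = "https://api.mistral.ai/v1/audio/transcriptions"
MAX_CONTEXT_TERMS = 100  # limit on bias terms stated in the article


@dataclass
class TranscriptionRequest:
    """Hypothetical container for one batch transcription request."""
    audio_path: str
    model: str = "voxtral-mini-2602"
    diarize: bool = False
    timestamp_granularity: Optional[str] = None
    context_terms: List[str] = field(default_factory=list)

    def form_fields(self) -> Dict[str, str]:
        """Build form fields; the field names are assumed, not confirmed."""
        if len(self.context_terms) > MAX_CONTEXT_TERMS:
            raise ValueError(f"at most {MAX_CONTEXT_TERMS} context terms are allowed")
        fields = {"model": self.model}
        if self.diarize:
            fields["diarize"] = "true"
        if self.timestamp_granularity:
            fields["timestamp_granularities"] = self.timestamp_granularity
        if self.context_terms:
            fields["context_bias"] = ",".join(self.context_terms)
        return fields


request = TranscriptionRequest(
    audio_path="meeting.wav",  # placeholder file name
    diarize=True,
    context_terms=["Voxtral", "vLLM"],
)
print(API_URL)
print(request.form_fields())
```

Validating the 100-term limit on the client side catches oversized bias lists before any audio is uploaded.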

The audio playground in Mistral Studio lets users:

- Upload up to 10 audio files (.mp3, .wav, .m4a, .flac, .ogg) up to 1 GB each.

- Toggle diarization, choose timestamp granularity, and configure context bias terms.
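The playground limits above are easy to pre-check before uploading a batch. The sketch below is a hypothetical local validator, not part of any Mistral tooling, and it assumes 1 GB means 10^9 bytes, which the article does not specify.

```python
from pathlib import PurePath
from typing import Dict, List

ALLOWED_EXTENSIONS = {".mp3", ".wav", ".m4a", ".flac", ".ogg"}
MAX_FILES = 10
MAX_FILE_BYTES = 1_000_000_000  # assumes decimal gigabytes


def validate_uploads(files: Dict[str, int]) -> List[str]:
    """Return a list of problems for a {file name: size in bytes} mapping."""
    problems = []
    if len(files) > MAX_FILES:
        problems.append(f"too many files: {len(files)} > {MAX_FILES}")
    for name, size in files.items():
        extension = PurePath(name).suffix.lower()
        if extension not in ALLOWED_EXTENSIONS:
            problems.append(f"{name}: unsupported extension {extension!r}")
        if size > MAX_FILE_BYTES:
            problems.append(f"{name}: larger than 1 GB")
    return problems


print(validate_uploads({"call.mp3": 5_000_000}))
print(validate_uploads({"notes.txt": 10, "big.wav": 2_000_000_000}))
```

An empty list means the batch fits the stated limits; anything else names the offending file and the rule it breaks.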



Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.